As artificial intelligence (AI) systems become more powerful and prevalent, it becomes more urgent that we understand and prepare for the rise of digital minds (i.e., digital systems that appear to have mental faculties such as reasoning, emotion, and agency). This urgency has been at the front of our minds over the past year as AI development has accelerated.

Whether one thinks the next decade will see artificial general intelligence (AGI)—defined as human-level performance on every cognitive task—or only incremental improvements on today’s systems, we believe society will soon coexist with a new class of beings, and this will bring radical social transformation. Our research focuses on building a sufficient understanding of these changes to navigate the transformation in a way that benefits all sentient beings.

Our 2024 end-of-year blog post is short because our noses are to the grindstone. We are immensely grateful to the generous supporters who have made this work possible. This giving season, we would particularly appreciate your donation, because our focus on research has left us with limited time for fundraising.

Below is a brief list of some of our recently completed research, which covers both the agency (i.e., the ability to take action) and the experience or patiency (i.e., the ability to be acted upon) of digital minds.

AIMS 2023 and 2024

We continue to run our longitudinal, nationally representative survey of U.S. adults on Artificial Intelligence, Morality, and Sentience (AIMS), which was recently discussed in New Scientist. Drawing on the 111-question supplement we ran in 2023, we published a blog post on AI Policy Insights from the AIMS Survey. We are fielding the next wave of the survey in November and December 2024, and we have multiple papers based on the initial AIMS data currently under review.

Robot autonomy

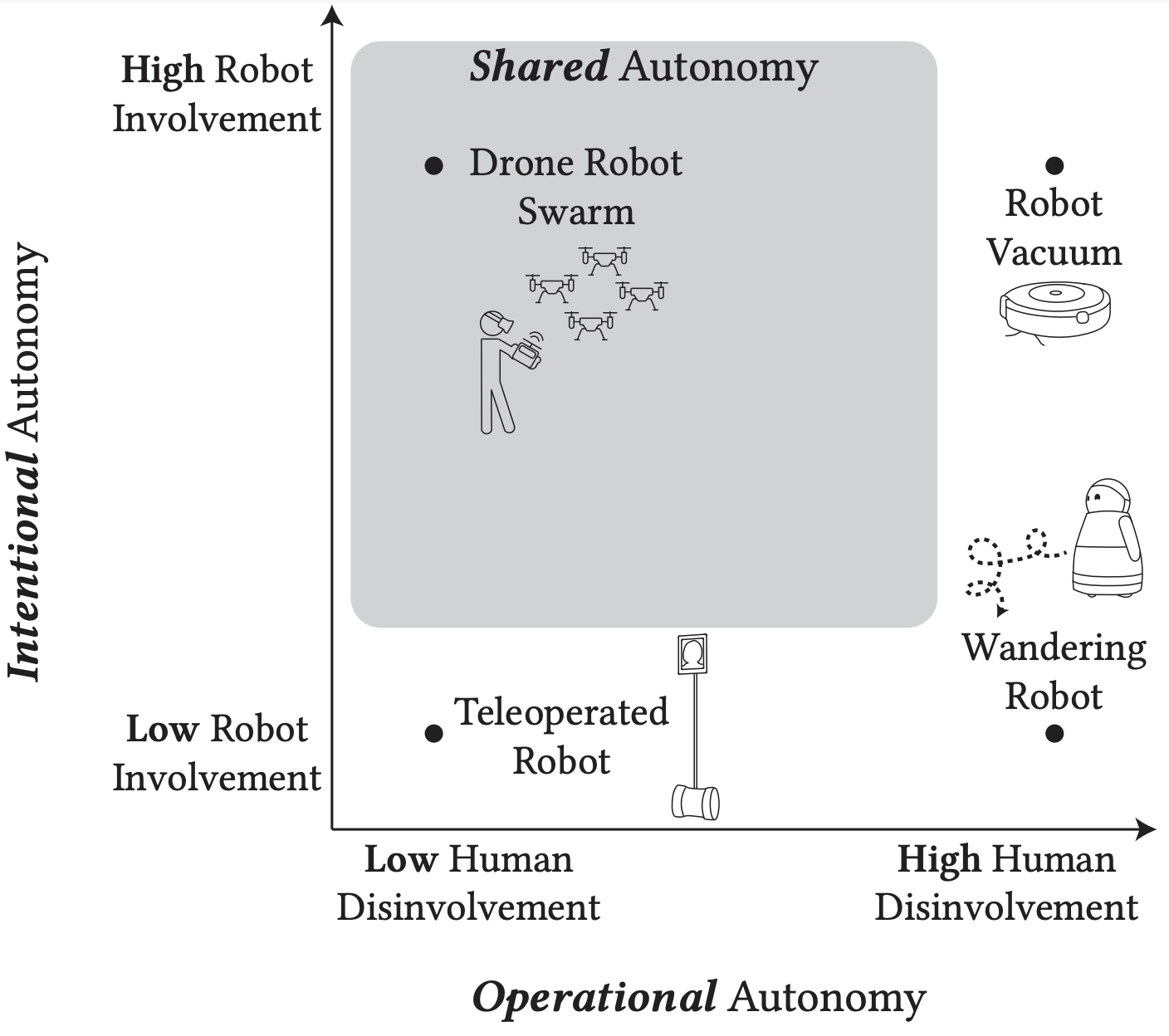

In May, we published a paper, “A Taxonomy of Robot Autonomy for Human-Robot Interaction,” in collaboration with the Human-Robot Interaction (HRI) lab at the University of Chicago. The big picture behind this work is that current evaluations and measures of AI capabilities have become insufficient for modern AI (and certainly for near-future AI). As a small first step, we upgrade one of the most straightforward notions in human-AI interaction: autonomy. The paper appears in the proceedings of the HRI conference, the top peer-reviewed venue for HRI research.

Here is an example of the framework, in which the single axis of autonomy is disentangled into two dimensions: the robot’s own intentional autonomy and the human-dependent operational autonomy.

AI effects on moral consideration

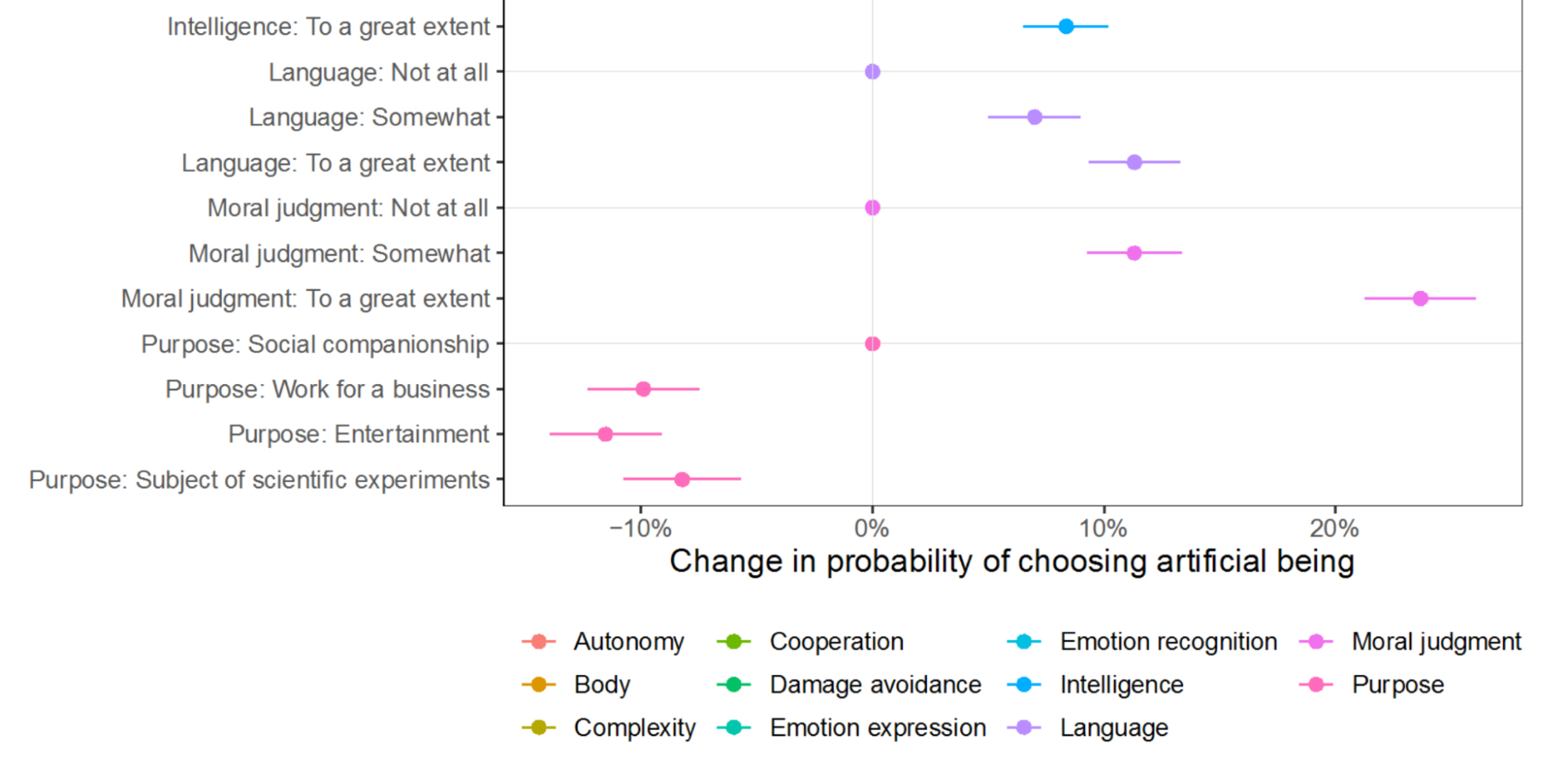

In May, we also published a paper, “Which Artificial Intelligences Do People Care About Most? A Conjoint Experiment on Moral Consideration.” This paper, published at CHI (a top peer-reviewed venue for human-computer interaction research), complements our 2022 paper, which tested the human factors that affect moral consideration of AIs. Here, we instead test the effects of 11 features of the AI itself, finding that the AI’s physical body and its prosociality have the largest effects on moral consideration. This work has important implications for the design of systems that we expect to develop morally relevant capacities.

Here is a partial figure of the results, showing the effect of each feature listed on the y-axis:

Spillover and double standards

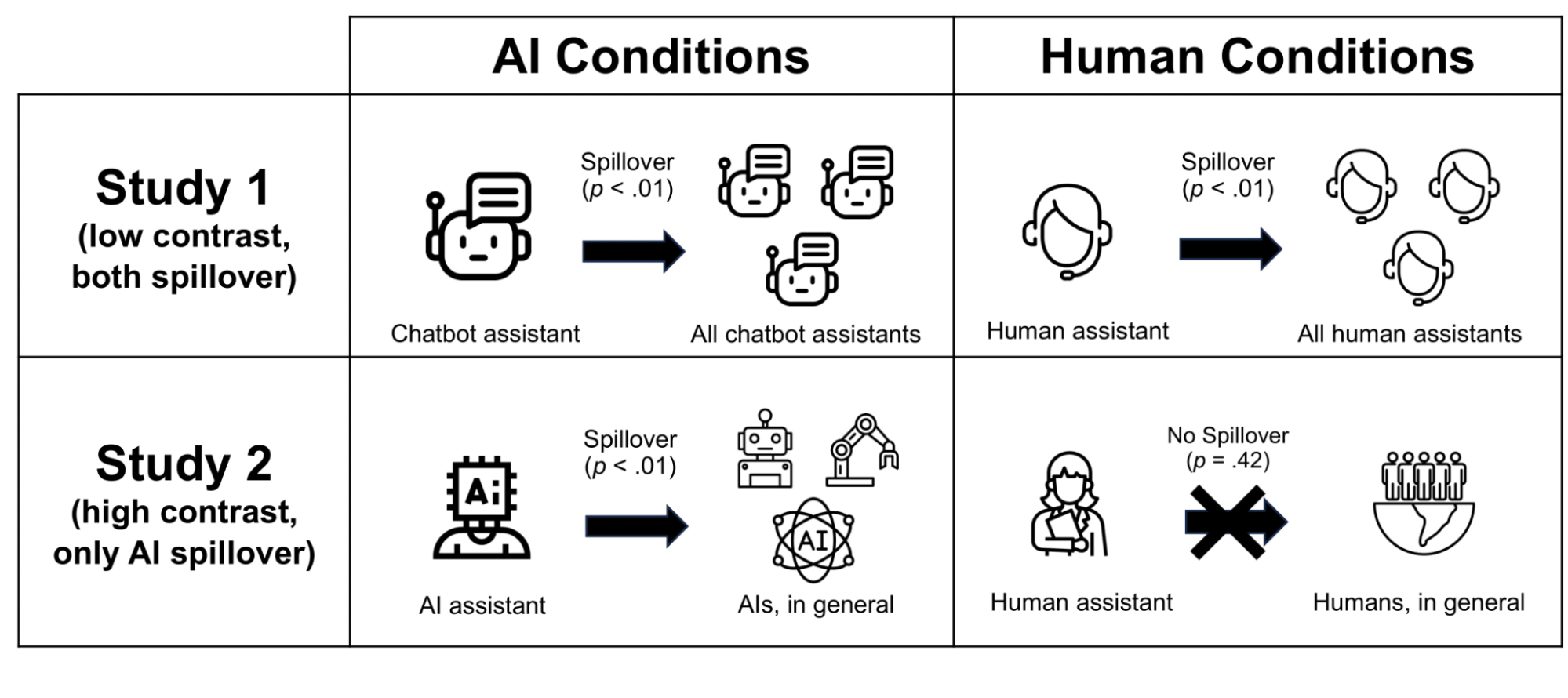

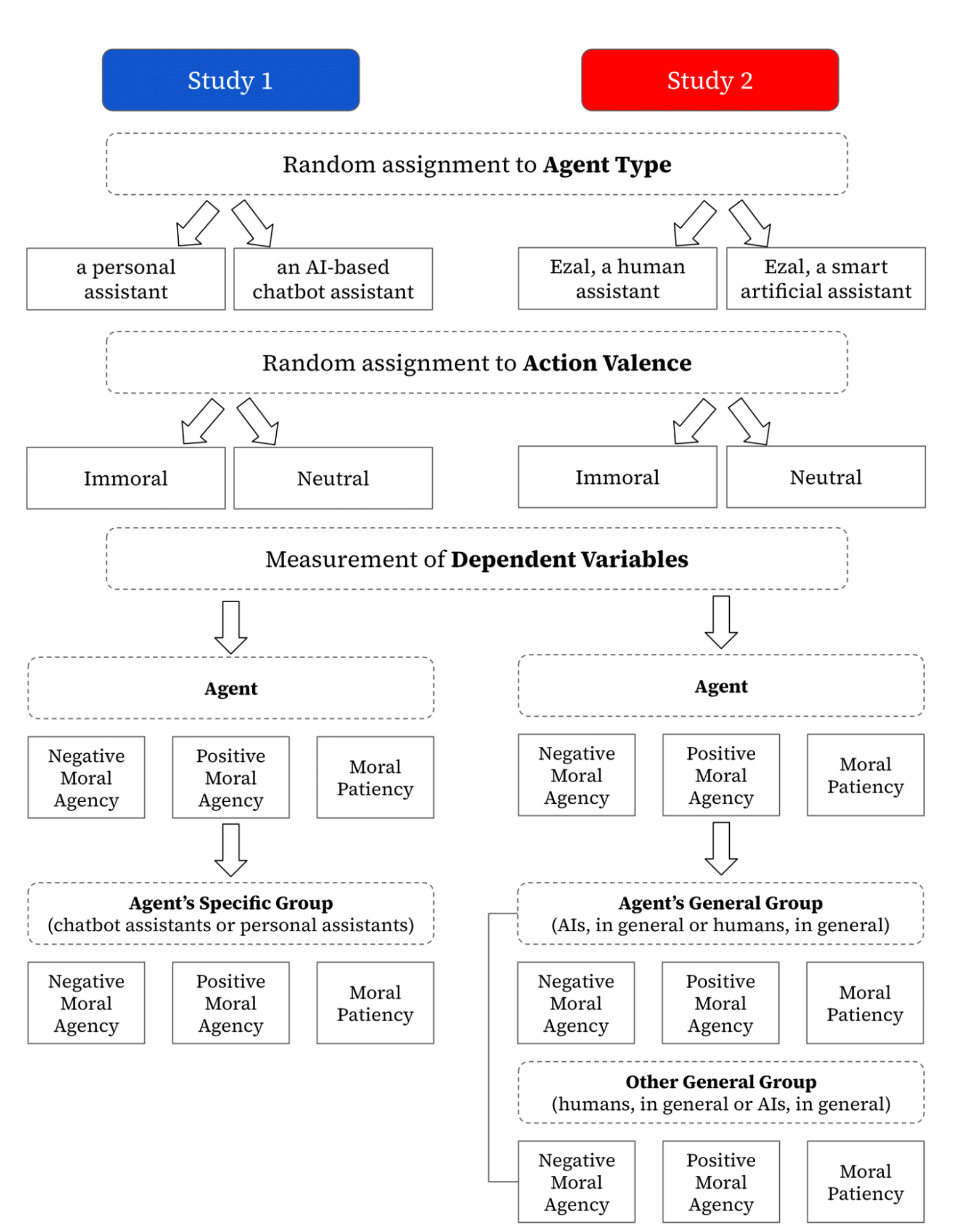

Our forthcoming CSCW paper, “The AI Double Standard: Humans Judge All AIs for the Actions of One,” reports two human-subjects experiments. In each, we present a scenario in which an assistant (human or AI) has committed a moral wrong, and we measure attitudes toward the assistant and toward their group (humans or AIs). In both experiments, participants judged the AI group for the actions of one AI, but in only one of the experiments did participants judge the human group for the actions of one human. We term this difference in moral spillover “the AI double standard” and discuss how quick judgments about all AIs could shape the trajectory of how digital minds are introduced into human society.

Here is a summary of the two studies and their findings:

And here is a summary of the methodology of the two studies: