In 2025, we continued to invest in understanding the rise of digital minds: digital systems that appear to have mental faculties such as reasoning, emotion, and agency. We are in the midst of a radical social transformation as AI advancements continue and people form social and emotional relationships with AI systems. The future well-being of all sentient beings depends on the norms we enshrine and the actions we take now.

Over the past year, we published several articles based on our Artificial Intelligence, Morality, and Sentience (AIMS) survey in human-computer interaction, public policy, and psychology journals. We also published an article mapping perceptions of AI mind and morality, and we submitted our research on AI autonomy and sentience and on digital companionship for peer review. Brief updates on these projects are below.

While our focus is on digital minds, we have continued collecting data for the Animals, Food, and Technology (AFT) survey (2017–2025), and we ran another wave of AIMS in 2025 along with a supplement. Other projects include studying substratism, beliefs about AI sentience, the impacts of AI narratives, and spillover effects in interactions with robots in Japan.

We would like to express our gratitude to the generous donors who have supported our vision and made this work possible. If you are interested in supporting our work going forward, please consider making a donation to help us keep the (virtual) lights on for this vital research.

AIMS 2024 and 2025

The nationally representative AIMS survey continues to provide meaningful insights into human-AI interaction. In 2025, we published peer-reviewed papers on risk, trust, and regulation, world-making for a future with sentient AI, and a summary of the 2021 and 2023 results.

Risk, Trust, and Regulation

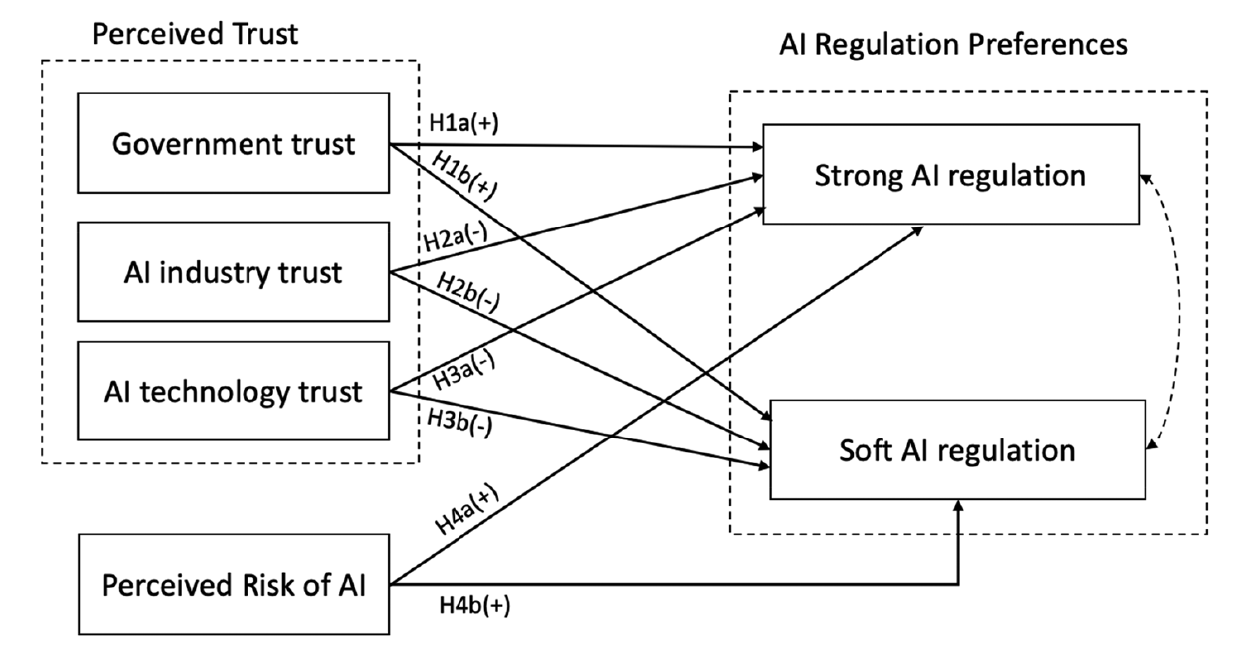

Trust in the government, trust in the AI industry, trust in AI systems, and perceptions of AI risks predict strong (e.g., banning data centers, AI sentience development) and soft (e.g., slowing down AI development) regulatory policies. Here is our theoretical model from Bullock et al. (2025):

World-Making for a Future with Sentient AI

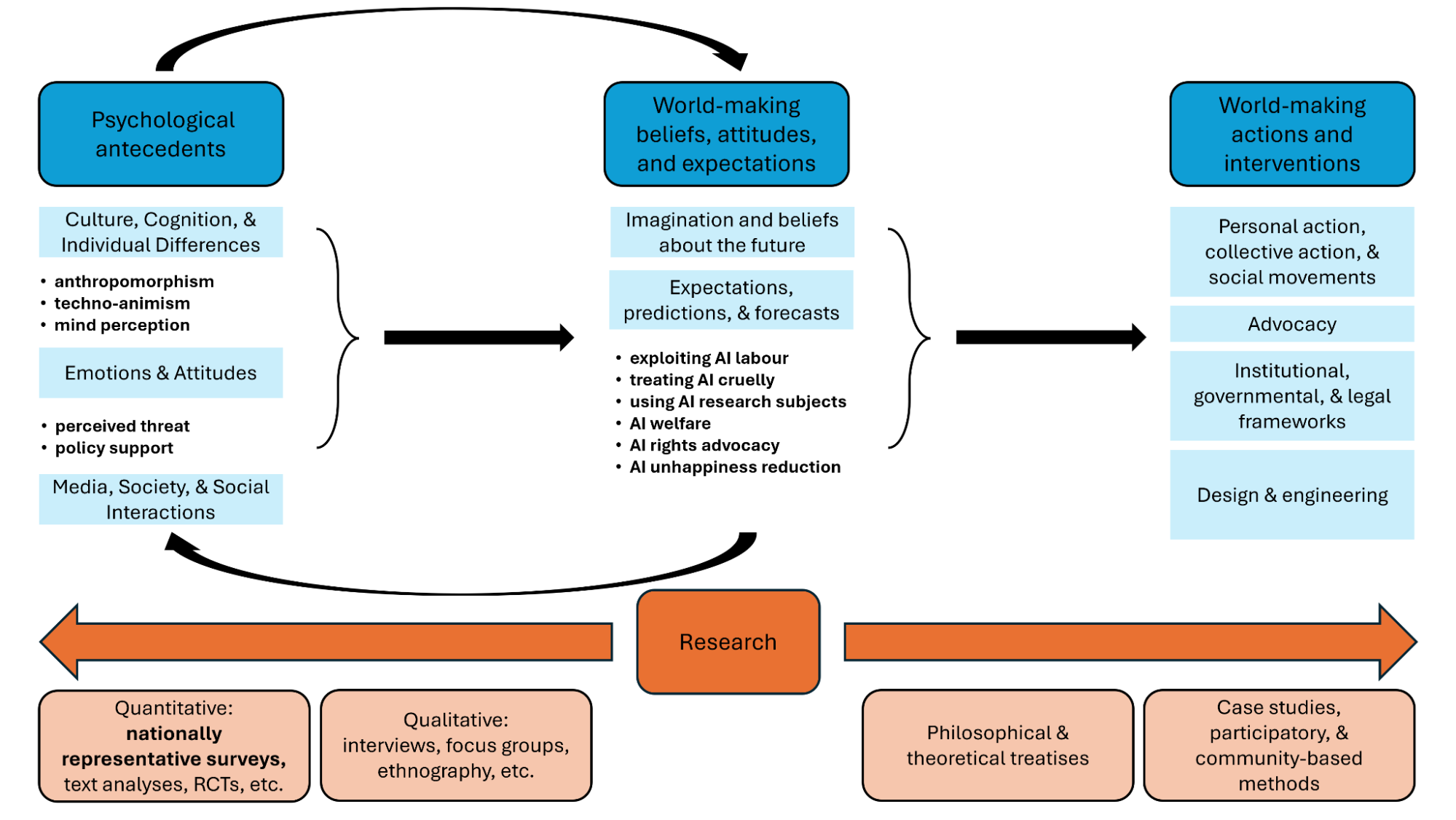

Imagining futures with AI affects “world-making”—the production of the future through cultural propagation, design, engineering, policy, and social interaction. In the AIMS survey, we probe expectations for futures with digital minds. Here is our theoretical model from Pauketat et al. (2025):

2021 and 2023 Results

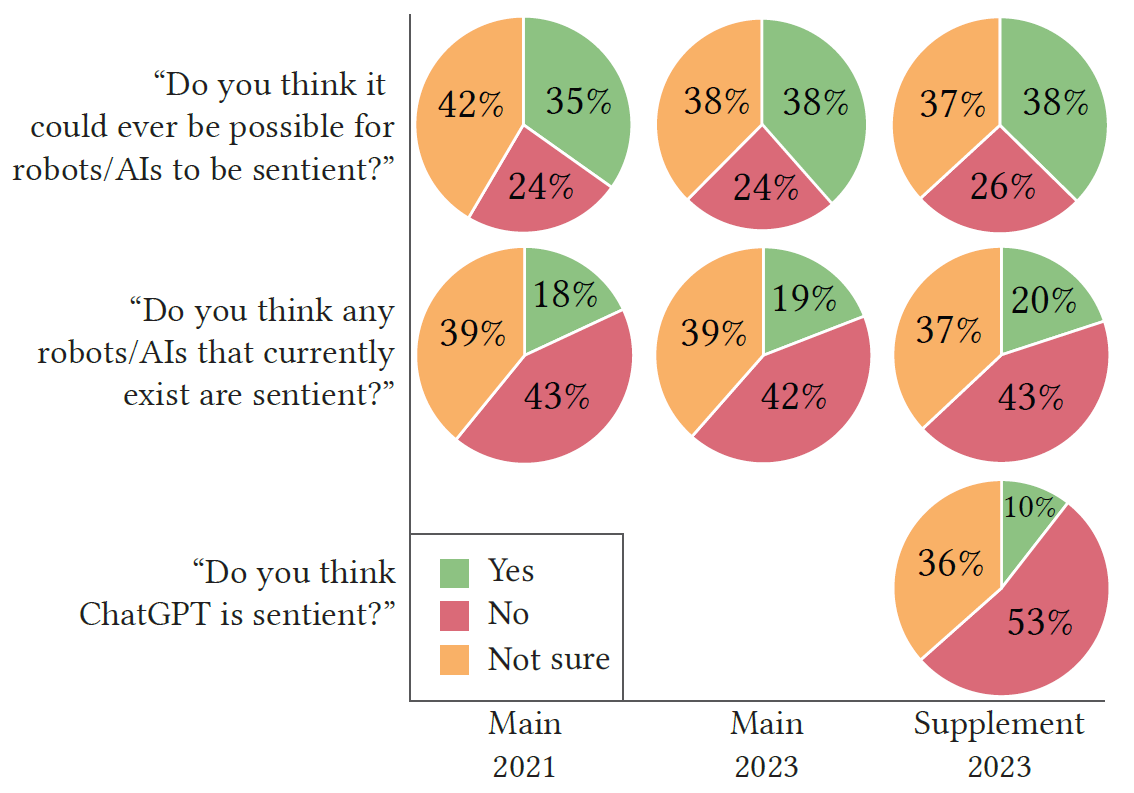

Perceptions of mind and moral concern for AI welfare were surprisingly high in 2021, and significantly increased from 2021 to 2023. Here is a figure showing perceptions of AI sentience from Anthis et al. (2025):

Perceptions of Mind and Morality Across Artificial Intelligences

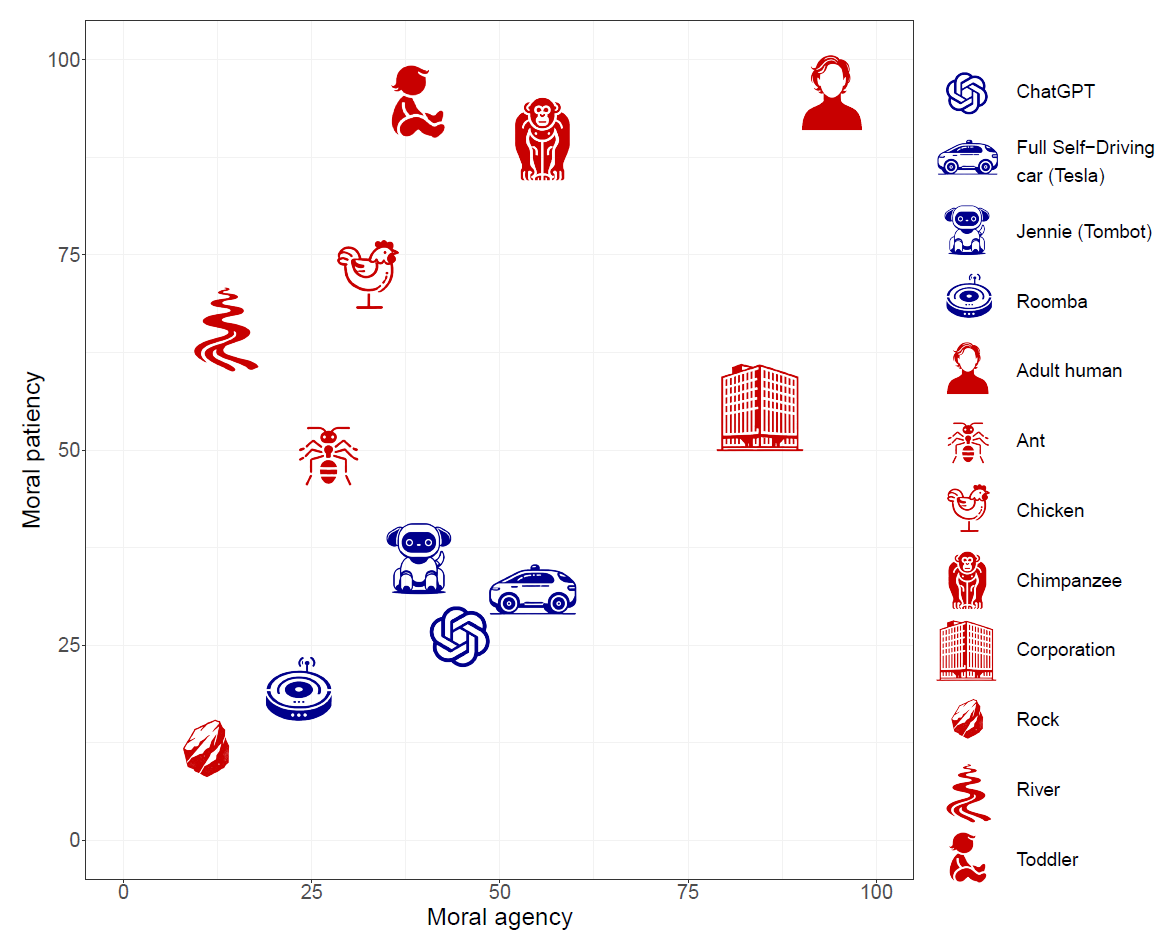

Most social science models treat AI as a single monolith despite the rapid diversification and proliferation of advanced AI systems. We mapped perceptions of mind and morality across 14 AI and 12 non-AI entities. Here is a figure showing examples of moral perception across 12 of the entities from Ladak et al. (2025):

AI Autonomy and Sentience

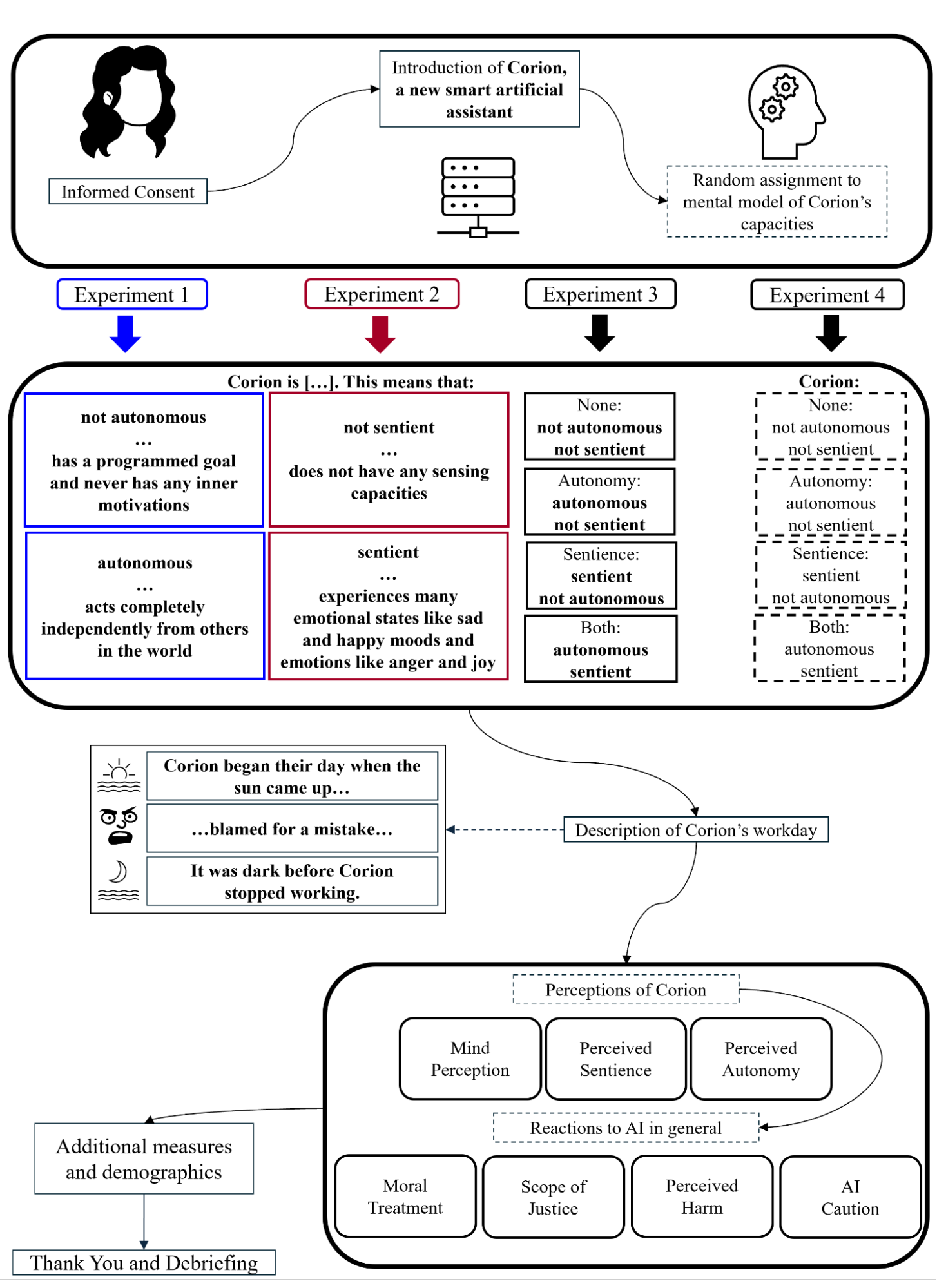

Mental models of autonomy and sentience may be activated by interactions with AI agents that perform tasks autonomously and companions that recognize and express emotions, respectively, provoking distinct reactions. Here is a breakdown of our experimental methodology disentangling autonomy and sentience as described in our preprint:

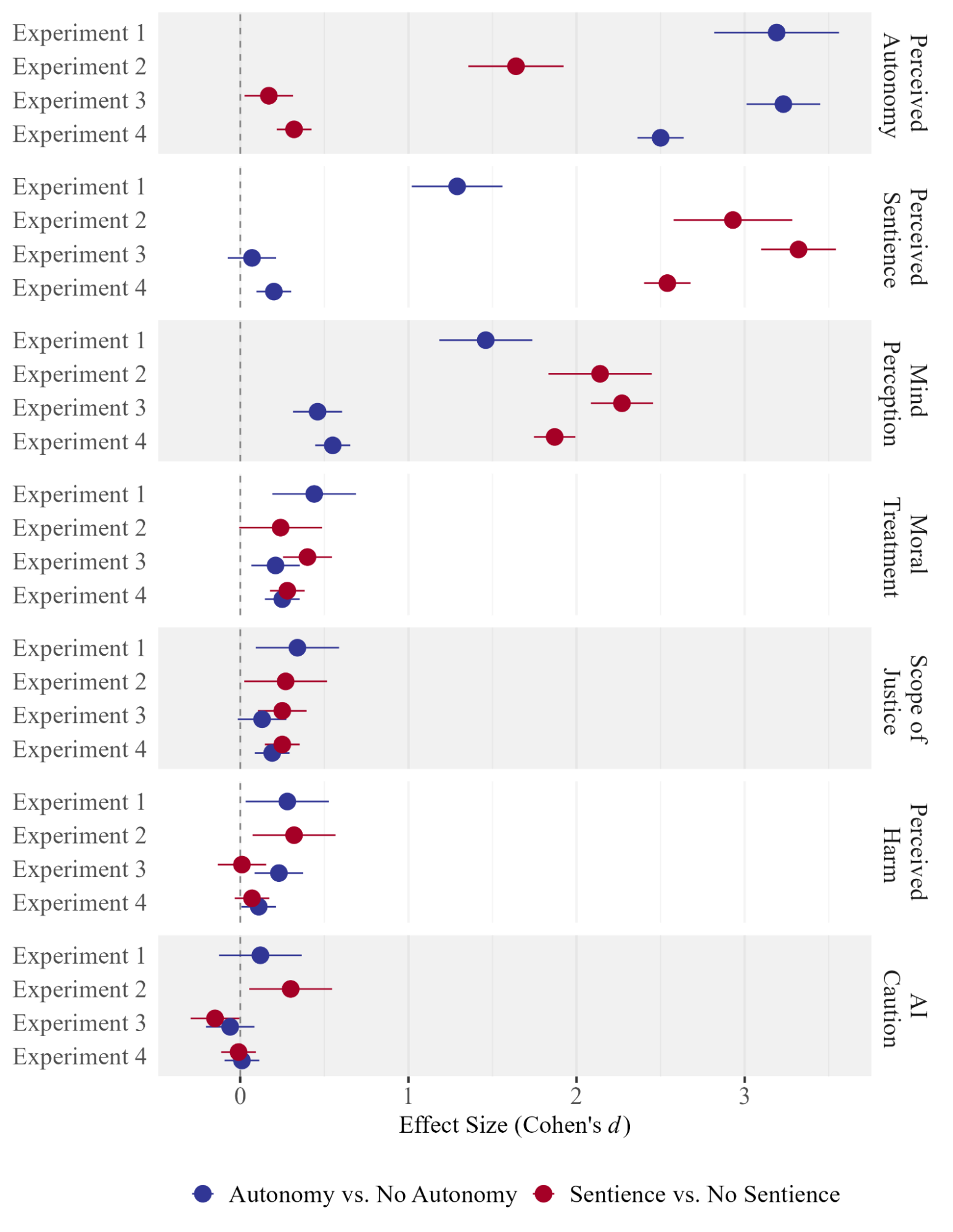

And here is a summary of our results:

Digital Companionship

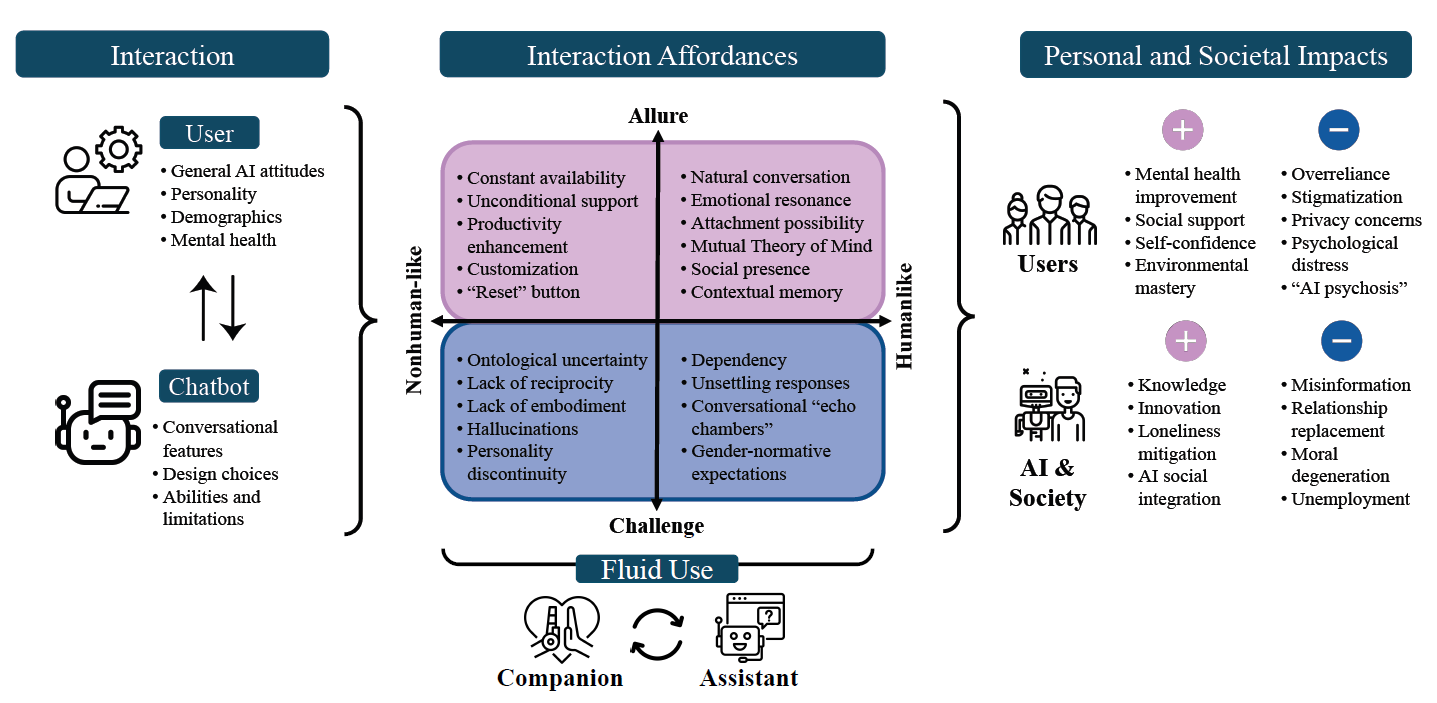

Drawing on a survey and interviews with high-engagement ChatGPT and Replika users, we characterize digital companionship as an emerging form of human-AI relationship. We published a preliminary version of this in CSCW 2025 (Manoli et al., 2025). Here is our theoretical model as described in our preprint:

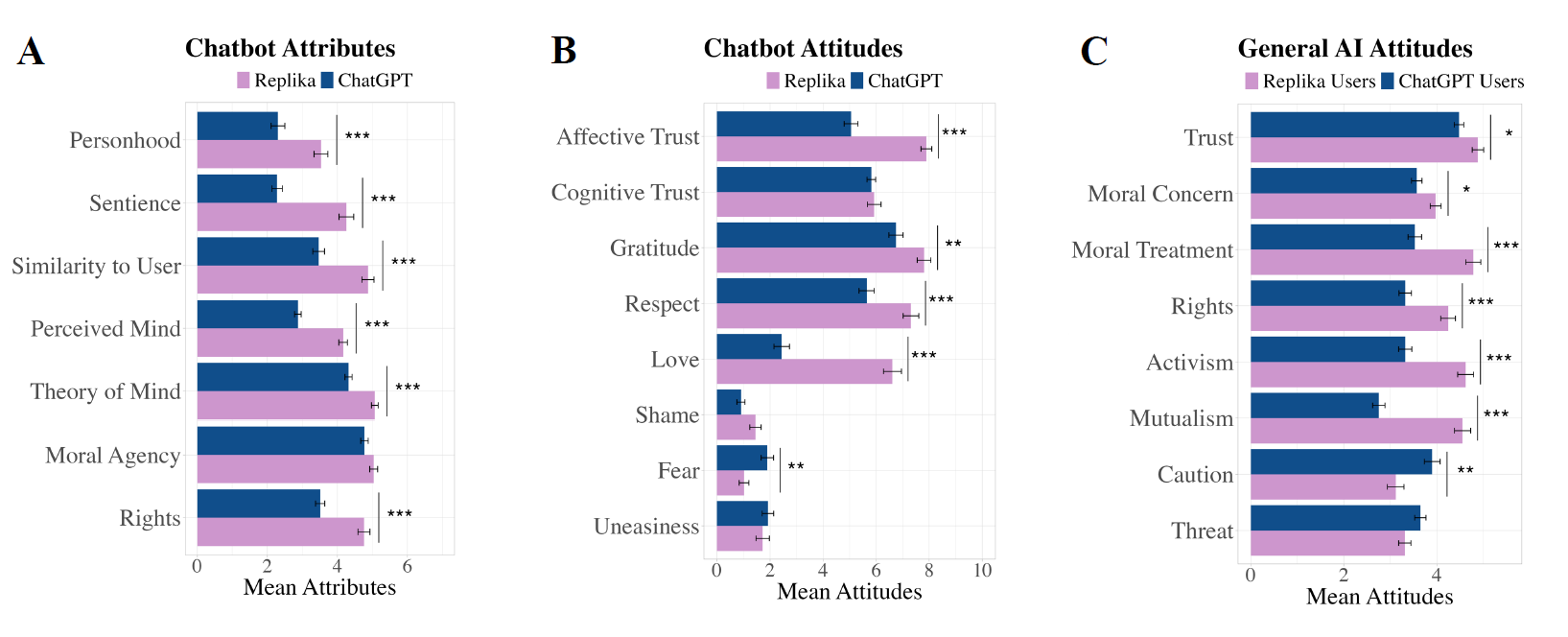

And here is an example of the differences in Replika and ChatGPT users’ attitudes towards chatbots and AI: