Critics in EAA discussions sometimes assert that if a piece of evidence is weak, it counts for nothing or the conclusion it points to is wrong, and some claim that there is absolutely no evidence for a position.[1] While evidence strength is worth discussing, these tactics are unhealthy and unproductive because the weakness of evidence is not by itself an argument against a conclusion. To argue against a conclusion, as opposed to just showing that we should have limited confidence in it, a debater needs to either (i) bring up additional, stronger evidence that weighs against the conclusion or (ii) argue that the initial evidence is actually evidence against the purported conclusion, not in favor of it.

For example, say someone thinks that handing out vegan leaflets is a more promising intervention than giving talks on humane education because of an experiment on vegan leafleting where the treatment group had 3% lower animal product consumption than a group who watched a humane education talk. Someone else could criticize this study for having a low sample size, potential social desirability bias, and other issues, but it would be wrong of them to dismiss the evidence entirely. The exception is a study so biased that its measured difference likely overstates the true difference by more than 3 percentage points, in which case an observed 3% difference would actually be evidence against leafleting’s effectiveness.

Essentially, weakness of evidence does not imply an effect in the opposite direction, or even agnosticism between the two directions. It just means our confidence in the conclusion should be low. Perhaps our concerns about the experiment should make us discount our estimate of the real effect size all the way down to 0.1%, but even that is still some evidence in favor of leafleting. If we need to decide whether a large charity runs a leafleting or a humane education program, and they’re unwilling to choose a third option,[2] then that 0.1% difference could affect thousands of animals or more.

Both parties could also present additional evidence. Maybe that additional evidence is just speculation and intuition, which are plausibly more robust evidence than some of the existing experiments in the animal advocacy field. If someone thinks that behavior change based on an intervention as small as a leaflet is very unlikely, they might favor humane education because extended interactions seem to that person to be much more likely to lead to behavior change.

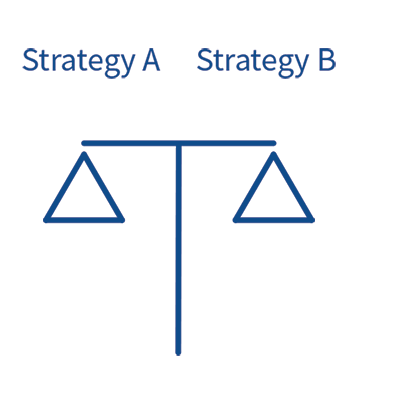

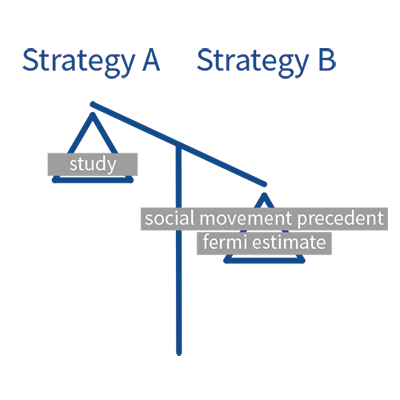

We can visualize this by comparing evidence-based reasoning to using a scale with physical weights, where evidence accumulates on each side of a debate (the left or the right platform of the scale) and our conclusion is based on which side is heavier. So here’s what a debate would look like before we get any evidence:

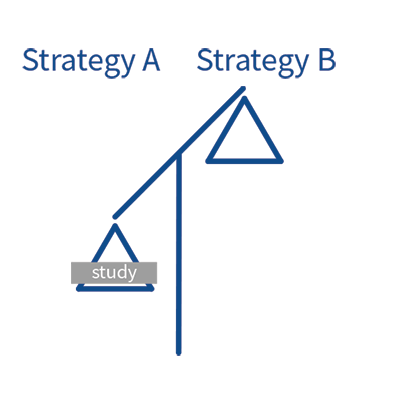

Once we get a piece of evidence, such as a study on a particular strategy, the scale leans towards that conclusion:

What happens when that evidence weakens, perhaps because we find out about a surprisingly low sample size or other methodological concerns?

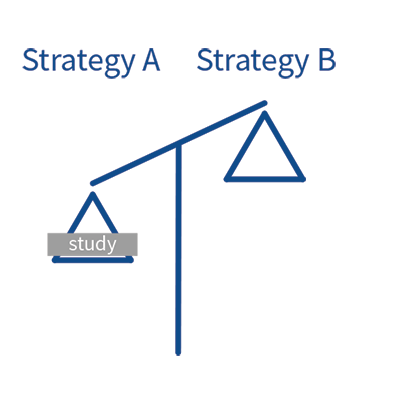

The evidence loses some of its weight, but no evidence was added to the other side of the scale, so it doesn’t tip in the other direction. This is true both for evidence and for physical objects: reducing the weight of the only item on a scale will not make the scale lean the other way. What happens when you add more evidence, or more physical objects?

Note: A Fermi estimate is the result of a calculation that combines several intuitive/speculative estimates to guess the figure we’re interested in, e.g. the cost-effectiveness of the intervention.

The scale analogy is useful for visualization, but it’s usually inaccurate in an important way: evidence often has more than two places to sit, unlike weights on the two platforms of a scale. In the real world, evidence can sit at different points across a single, wide platform. You could have three studies, one suggesting a 4% increase in vegetarianism, one a 2% increase, and one a 5% decrease. You would then assign a weight to each piece of evidence, based on its methodology, design, etc., and conclude a certain effect size as the weighted average, in this case about 0.33% if the weights are the same.
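The arithmetic behind that 0.33% figure can be sketched in a few lines of Python. This is only an illustration of a simple weighted average, not a recommended meta-analysis method. The function name `pooled_effect` and the unequal weights in the second call are hypothetical, chosen purely to show how the estimate moves when one study is trusted less.

```python
def pooled_effect(estimates, weights=None):
    """Weighted average of effect-size estimates (in percentage points).

    `weights` expresses how much we trust each estimate; if omitted,
    every estimate is trusted equally.
    """
    if weights is None:
        weights = [1.0] * len(estimates)
    if len(weights) != len(estimates):
        raise ValueError("need exactly one weight per estimate")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * e for w, e in zip(weights, estimates)) / total


# The three studies from the text: +4%, +2%, and a 5% decrease.
estimates = [4.0, 2.0, -5.0]

# Equal weights reproduce the figure in the text: (4 + 2 - 5) / 3.
print(round(pooled_effect(estimates), 2))  # 0.33

# Hypothetical weights: suppose we trust the third study half as much.
# Its weight shrinks, so it pulls the estimate down less, but it still counts.
print(round(pooled_effect(estimates, [1.0, 1.0, 0.5]), 2))  # 1.4
```

Halving the third study's weight shifts the pooled estimate from about 0.33% to 1.4%, but the study is never discarded. This is the same point made about the scale: weak evidence weighs less, not nothing.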

A good rule-of-thumb in EAA discussions is: If you want to criticize someone’s evidence for being mere speculation, intuition, or otherwise weak, you should either (i) say explicitly that you are just criticizing the explanation of the evidence, e.g. someone says “Leaflets are effective!” and you think it’s more accurate and worth the extra words to say “The evidence weakly weighs in favor of leafleting,” or (ii) introduce additional evidence, and argue why that evidence might be stronger than the critiqued evidence, even if the additional evidence you bring up is also just speculation and intuition.

It’s much easier to criticize the strength of a piece of evidence than to contribute new evidence (for instance, by running a new study). Criticism can also make a person seem intelligent and can help them easily reinforce a position they already held. For these reasons, it seems like the EAA research community should generally spend less time criticizing the strength of evidence and more time seeking out, analyzing, and aggregating new evidence.

But isn’t the strong evidence, e.g. randomized experiments, so much stronger that we should just ignore speculation and intuition, even if they should theoretically have non-zero weight?

Unfortunately there is little evidence for effective advocacy strategies that is as strong as, for instance, the best evidence in fields like history and psychology. Even in advocacy areas other than helping animals that have been the subject of more exhaustive scientific study, such as increasing voter turnout, many strategic decisions still rely heavily on speculation and intuition because we simply can’t run randomized controlled trials or similarly conclusive studies on all the outcomes we care about.[3]

If we measure immediate conversion to vegetarianism in animal advocacy, or individual voter turnout in a Get Out The Vote campaign, we’re still not seeing outcomes like long-term diet change, activist creation, or the impact of subjects on their communities and society as a whole, such as through media coverage. We have to rely on weaker evidence for those outcomes. Even if we decide to exclude those outcomes because the evidence is so weak, that decision to exclude is itself a speculative and weakly evidenced judgment.

We’ve aggregated a lot of evidence on important effective animal advocacy questions, and we’re excited to produce new evidence that advocates can use to make more informed judgements about their advocacy. The evidence we are able to acquire might not be strong enough to give us total confidence in our judgement calls, but we have to use what we have in order to do as much good as possible.

[1] While such a statement could be correct in theory, such as the position that the sky is red (and even this might have some evidence!), it seems to be demonstrably false in every common EAA debate.

[2] While this might seem like an artificial stipulation, it is quite common for large organizations and donors in the animal space to be unwilling to choose third options like funding further research.

[3] There are still plenty of challenges faced by RCTs, as has been discussed at length in the global poverty and health field. See, for example, RCTs: Not All That Glitters Is Gold.