Edited by Jacy Reese Anthis and Ali Ladak. Many thanks to Thomas Moynihan, Tobias Baumann, and Teo Ajantaival for reviewing and providing feedback. This article is also available and referenceable on the Open Science Framework: https://doi.org/10.31234/osf.io/sujwf

Abstract

We consider the terminology used to describe artificial entities and how this terminology may affect the moral consideration of artificial entities. Different combinations of terms variously emphasize the entity's role, material features, psychological features, and different research perspectives.[1] The ideal term may vary across contexts, but we favor “artificial sentience” in general, in part because “artificial” is more common in relevant contexts than its near-synonyms, such as “synthetic” and “digital,” and in part because the term emphasizes the sentient artificial entities who deserve moral consideration. The terms used to define and refer to these entities often take a human perspective by focusing on the benefits and drawbacks to humans. Evaluating the benefits and drawbacks of the terminology to the moral consideration of artificial entities may help to clarify emerging research, improve its impact, and align the interests of sentient artificial entities with the study of artificial intelligence (AI), especially research on AI ethics.

Table of Contents

The importance of conceptual clarity

Terminology and conceptual definitions

Table 1: Terminology defining an entity’s role

Table 2: Terminology defining material features

Table 3: Terminology defining psychological features

Table 4: Consequential combinations of features and role

Reasons for “artificial sentience”

Reasons against “artificial sentience”

Appendix: Terminology defining relevant fields of study

The importance of conceptual clarity

Sentience Institute uses the term “artificial sentience” to describe artificial entities with the capacity for positive and negative experiences. When we survey the public, we use the terms “artificial beings” and “robots/AIs” to refer to “intelligent entities built by humans, such as robots, virtual copies of human brains, or computer programs that solve problems, with or without a physical body, that may exist now or in the future.” We have also used the terms “artificial intelligences” and “artificial entities.” These five terms are just a few of the many used in the design, development, and scholarship of AI.

When a term that expresses a concept is ambiguous, it becomes difficult to disentangle the theoretical connotation intended by the researchers from the meaning of participants’ responses in empirical studies. This reduces the impact of empirical research, especially for concepts that are tied to ordinary language like “artificial.”[2] Researchers may assume that everyone shares their definitions when in fact the concepts are fuzzy, in that they “possess two or more alternative meanings and thus cannot be reliably identified or applied by different readers or scholars” (Markusen, 2003, p. 702). Markusen argues that this can decrease scholars’ belief in the need for empirical tests, can lead to the devaluation of empirical evidence, and can reduce the credibility of research.

Conceptual ambiguity can lead to concept creep, when a concept becomes so broad and deep that non-examples are difficult to find. The methodological and real-world implications of conceptual ambiguity range from reduced trustworthiness of the research to the detachment of scholarship from policy-making and advocacy. For instance, a policy-maker is more likely to avoid “fuzzy concepts” when implementing policies because there are no clear definitions that can be used to structure their policy. That is, “fuzzy concepts” provide fuzzy guidance on how a policy will affect systemic power distributions, legal structures, and the actions of different people (e.g., advocates, politicians, lay people).

In the following sections, we include as many terms as we could find that are relevant to the interdisciplinary study of artificial entities’ moral, social, and mental capacities.[3]

Note. We loosely group terms into four categories although several terms could belong to other categories.

Terminology and conceptual definitions

The first set of terms elicits a mental image of an entity’s role in the world. These terms are most frequently used as nouns that can be modified with information about their material or psychological features, although some, like “super” and “transformative,” typically serve as adjectives. “Machine” is used as a noun with feature modifiers (e.g., “digital machine,” “autonomous machine”). However, it is also sometimes used as a feature-like modifier of other features (e.g., “machine intelligence”). Below are terms defining an entity’s role.

Table 1: Terminology defining an entity’s role

The second set of terms evokes a mental image of an artificial entity’s material structure. These terms focus either on what the entity is made from or how they exist. They often modify terms defining an entity’s role (e.g., “artificial agent”). Below are terms defining material features.

Table 2: Terminology defining material features

A third set of terms defines the psychological features of an entity. These terms operationalize abstract and indirectly observable mental phenomena (e.g., intelligence, friendliness). Psychological features are inferred from observing complex criteria agreed upon by experts. For example, we cannot observe “autonomy” directly. We infer “autonomy” from introspection (i.e., self-reports) and behavior. These terms differ from material features because material features can be directly observed. Below are terms defining psychological features.

Table 3: Terminology defining psychological features

Combinations of feature and role terms have been used within and across many fields of study[4] to describe artificial entities. For instance, “friendly AI” has been used to describe AIs who mimic human friendliness and are benign to humans. “Moral machine” has been used to describe machines that make ethical decisions or need to solve moral dilemmas, like self-driving cars. Some of these combinations are more widely used and well-known. Other combinations have stronger implications for taking moral action and receiving moral consideration. Many combinations are used only in specific contexts, based on convenience, or merely as placeholders. Below we outline some combinations that we think are consequential and related to the moral consideration of artificial entities.

Table 4: Consequential combinations of features and role

What term should we use?

Is the same terminology useful for the general public, engineers, scientists, ethicists, lawyers, and policy-makers? There is some suggestion that the general public and experts differ in their understanding of artificial entities and support for their rights. Would a common, consensual terminology enable more effective interdisciplinary research, policy-making, and advocacy? If having an imprecise definition is preferred, how can differences in terminology be reconciled to maximize the clarity and utility of the terms?

Whether or not terms generalize across contexts might matter. Should we use terminology that can be applied to humans, nonhuman animals, algorithms, and machines? Is it necessary to specify an entity’s role? Several of the consequential combinations do not (e.g., “artificial general intelligence,” “artificial sentience”). Does the psychological feature need to be narrow or broad? “Consciousness” is broad, but it is also “fuzzy” because of the many conceptualizations in current usage. On the other hand, a narrow term like “friendly” only applies to one aspect of an entity’s behavior and may not retain meaning over time and across contexts.

Below we explain our preference for “artificial sentience,” consider some possible reasons not to use “artificial sentience,” and consider using multiple terms.

Reasons for “artificial sentience”

We favor the term “artificial sentience” for the following linguistic reasons:

- “Artificial sentience” sits in a “Goldilocks Zone” of broad and narrow terminology. “Artificial” is inclusive of many entities and distinguishes entities composed at least partly of non-biological substrates from completely biological entities. “Artificial” is commonly used and understood in relevant contexts. “Sentience” is an aspect of “mind” that can be differentiated from broad and narrow psychological features such as “mind” and “intelligence,” respectively.

- “Artificial sentience,” as a newer term, does not have divergent meanings across time and field of study like the dual meanings that exist for some terms (e.g., “digital mind”). Scholars concerned with the effects of AIs on human society have used “digital mind” to refer to psychological minds that exist purely on a computer or in a digital space. The term is also associated with human brains and the dynamics of digital technologies. In this context, a “digital mind” is a human brain on digital media.

- “Artificial sentience” uses similar language to “artificial intelligence” and is likely to be conceptually associated with it. “Artificial intelligence” has a well-established meaning and is commonly used by the general public and experts. We believe that similarity to the well-known “artificial intelligence” will enable experts and the public alike to grasp the meaning of “artificial sentience” as an “experiential” extension of “artificial intelligence.”

- There is little reason to expect the term “artificial sentience” to be associated with God-like terms, such as “machine superintelligence,” that reduce moral consideration by dint of being too threatening, or with tool-like terms, such as “smart device,” that prompt instrumental mental images. The common conception of artificial entities as technological tools may limit the extent to which they are morally considered, and overcoming these associations with existing terminology may be difficult.

We favor the term “artificial sentience” for the following conceptual reasons:

- “Artificial sentience” avoids some of the conceptual difficulties associated with “artificial consciousness.” Some stances within philosophy, including a position held at SI, argue that the construct of “consciousness” and some of its underlying mental circuitry (e.g., how introspection arises from neural and cognitive systems) are particularly ill-defined and difficult to observe.

- “Artificial sentience” is inclusive of entirely non-biological entities, hybrid biological and non-biological entities, and artificial entities originating from evolutionarily biological processes like whole brain emulations. This inclusivity may increase the tractability of advocating for the moral consideration of artificial entities in a way that terms like “non-biological sentience” may not.

- The term “artificial sentience” prioritizes “sentience,” an affective capacity, as the key distinguishing aspect of mind critical to experiential capacities like motivation and affect. Although “mind” encompasses all mental capacities, it is sometimes used to signify only cognition, reasoning, and rationality. This usage of “mind” is often implicitly considered “cold,” controlled, and unemotional, which can lead to mechanistic forms of dehumanization and moral circle exclusion.

- “Sentience” requires features related to having affective experiences (e.g., the capacity to have positive and negative experiences). This definition may make “sentience” less subject to “fuzziness” than other psychological features that are difficult to define and observe like “qualia.”

- “Artificial sentience” may be less threatening to people than terms like “super-beneficiary,” “digital mind,” or “artificial consciousness.” “Super-beneficiary” may prompt realistic threats over resource sharing. “Digital mind” and “artificial consciousness” may prompt symbolic threats to human distinctiveness because of the association of “consciousness” and “mind” with humans’ sophisticated mental capacities. “Artificial sentience” may be less threatening because “sentience” is not unique to humans and because “sentience” does not necessarily imply sharing resources or social status.

We favor the term “artificial sentience” for the following moral reasons:

- “Artificial sentience” connotes the aspect of artificial entities’ minds that we believe is critical to moral consideration. Affective components of mind like sentience are linked to increased moral consideration. Cognitive components of mind like memory are typically associated with the capacity to take moral action.[5] We believe that this may be an important distinction for moral consideration given the potential need to distinguish between future “digital minds” who have some degree of sentience and “digital minds” that are cognitively sophisticated but devoid of experiential capacities.

- “Sentience” is the psychological feature most clearly related to the moral consideration of nonhumans. Specifically, “sentience” has a clear track record in nonhuman animal welfare science and advocacy for moral consideration and has some backing within AI ethics. “Consciousness” has a more convoluted relationship with moral consideration.

- There are clear consequences of sentience dismissal for humans (e.g., the Atlantic slave trade) and nonhumans (e.g., factory farming). The capacity to suffer is denied with sentience dismissal, facilitating exclusion from the moral circle. “Artificial sentience” is likely to be critical for recognizing the moral status of sentient artificial entities in a way that consequential combinations like “artificial consciousness” are not.

Reasons against “artificial sentience”

- The use of “artificial” may lead to some associations with “fakeness” or “unnaturalness.” This could be a concern if it leads to the dismissal of artificial entities’ capacity to have so-called “real” experiences.

- “Artificial sentience” may be more threatening than terms like “non-biological sentience”[6] if people associate “artificial” with being constructed in a laboratory for nefarious purposes or if they associate “artificial intelligence” with dangers outside of human control.

- “Sentience” is sometimes conflated with “sapience,” or conceptions of exaggerated intelligence, wisdom, and reasoning. This conflation could hinder an accurate understanding of what artificial sentience entails.

- “Artificial sentience” is new and unfamiliar to the general public and many experts. This could lead to some initial confusion. The longevity of the term could also be questioned. Given that it is new, we cannot yet know whether or not it will endure meaningfully into the future.

- “Artificial sentience” has some philosophical overlap with “artificial consciousness” that may increase confusion over the meaning of the term when used in interdisciplinary or transdisciplinary contexts. This overlap may also mean that some critiques of “artificial consciousness” may apply to conceptions of “artificial sentience” that are too broadly defined (e.g., broader than the capacity for positive and negative experiences).

- The connection between “artificial intelligence” and “artificial sentience” might create a spillover of hype from AI. Ungrounded excitement could prompt increased efforts to develop artificial sentience without enough forethought and preparation for a world with sentient artificial entities who may or may not receive moral consideration.

- “Artificial sentience” is likely to require evidence of the presence of a number of relevant features (e.g., those related to detecting harmful stimuli, behavioral avoidance, centralized information processing) that may enable us to make judgments about the likelihood and degree of sentience. This could enable a probabilistic approach for operationalizing “artificial sentience” that might increase our chances of correctly judging whether an artificial entity is sentient (advancing the moral consideration of artificial entities). However, having to calculate the probability of artificial sentience may make it easier for some to dismiss the existence of “artificial sentience” given that it may reduce confidence in the concept by providing too much evidence.

Using multiple terms

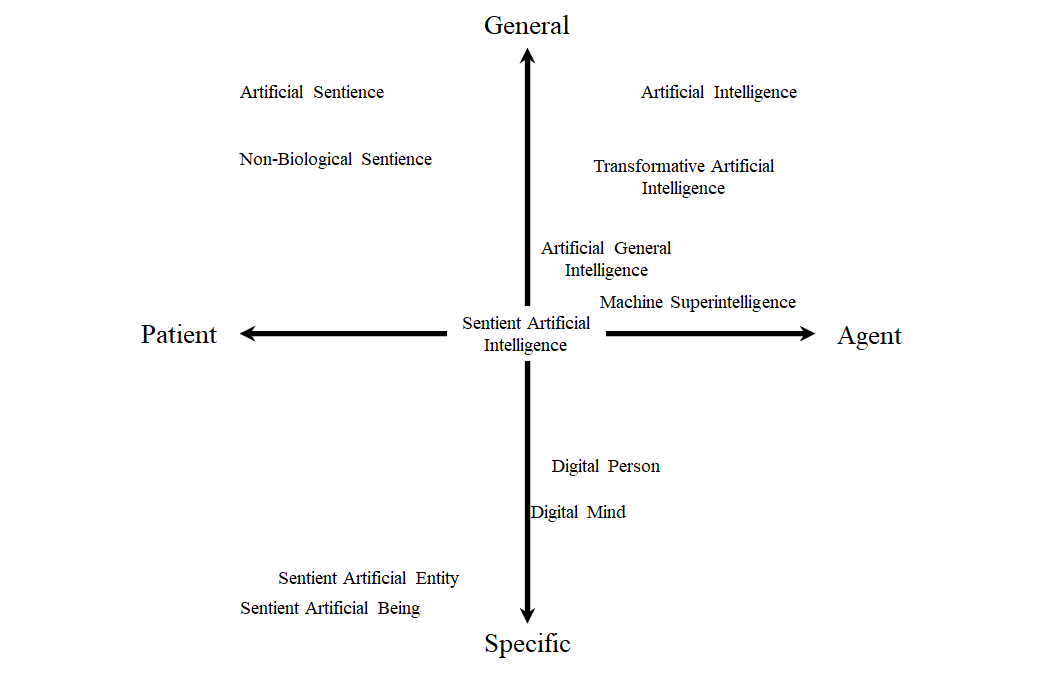

The best strategy might be to use multiple terms to represent the interests of algorithmically-based, at least partly non-biological entities, of which artificial sentience (AS) may eventually be considered an umbrella term. Below is a possible initial taxonomy of terms based on their relationship to the moral referents of patiency and agency and to the specificity of the term.

Figure 1: Possible Taxonomy of Terms

Note. Terms are positioned along two (of many possible) dimensions. Moral referents are on the x-axis and specificity is on the y-axis. These locations are imprecise as they reflect cultural and intellectual associations that are likely to vary across readers and change over time.

Possible uses for specific terms:

- “Sentient artificial being” and “sentient artificial entity” are likely to be useful for communicating about individual AIs with non-experts or in discussions where the emphasis is on sentience rather than intelligence.

- “Sentient artificial intelligence” (“sentient AI”) is likely to be useful for communicating with experts and non-experts about individual AIs with some degree of sentience or the capacity for sentience in AI.

- “Digital mind” is likely to be useful for emphasizing internal, algorithmic mental capacities rather than substrate-based material structures.

- “Digital people”[7] is likely to be useful when referring to the digital nature of human descendants and the sorts of societies they may live in.

Appendix: Terminology defining relevant fields of study

Some terms define the academic fields of study surrounding artificial entities. The fields described below seem likely to have the greatest impact on long-term outcomes of artificial entity development such as how they will be designed and the resulting ethical implications.

Table A1: Fields of Study

[1] We consider terms and their connotations in the English language. The usage and meaning of some terms are likely to be different in other languages, a topic that requires future study. Linguistic differences may have global implications for the moral consideration of artificial entities that have yet to be examined.

[2] Blascovich and Ginsburg (1978) discuss conceptual ambiguity with regard to the social psychological study of “risk-taking.”

[3] See the Appendix for a list of terms defining relevant fields of study.

[4] See the Appendix for a list of terms defining relevant fields of study.

[5] Summarizing from Gray et al. (2007) and Gray et al. (2012).

[6] A term like “non-biological sentience” might contrast better with “biological sentience,” increasing the focus on “sentience” rather than on the entity’s material features and substrate (i.e., whether they are a human, a nonhuman animal, algorithm, or machine). Reducing the emphasis on substrate may facilitate attempts to increase the moral consideration of artificial entities.

[7] See Holden Karnofsky’s Cold Takes blog for more on “digital people.”